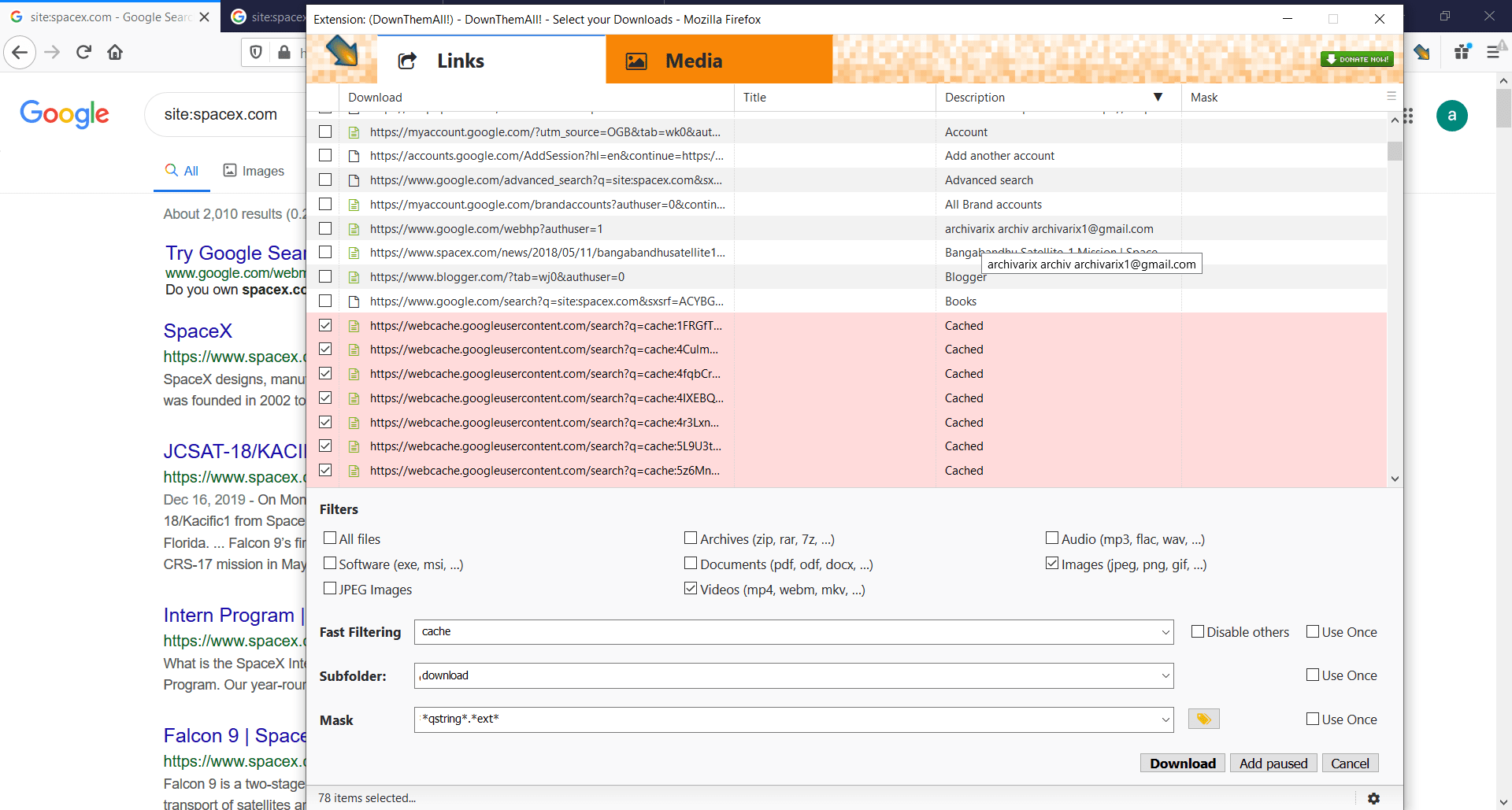

There will be times when you need access to a website but do not have access to the internet. Or you may want to make a backup of your own website, but your host does not offer that option. Maybe you want to use a popular website for reference when building your own, and you need 24/7 access to it. Whatever the case may be, there are a few ways to download an entire website and view it offline at your leisure. Websites also don't stay online forever, which is even more of a reason to learn how to download them. Here are some of the best tools for downloading a whole website for offline viewing, whether you are using a computer, tablet, or smartphone.

Website Download Tools

1. HTTrack

This free tool makes it easy to download a website for offline viewing. It copies a website from the internet to a local directory, rebuilding the site's directories and fetching the HTML, images, and other files from the server onto your computer. HTTrack automatically arranges the mirrored files to follow the structure of the original website. All that you need to do is open a page of the mirrored website in your browser, and you can browse the site just as you would online. You can also update an already downloaded website if it has been modified online, and you can resume interrupted downloads. The program is fully configurable and has its own integrated help system.

2. GetLeft

To use this website grabber, all that you have to do is provide the URL, and it downloads the complete website according to the options that you have specified. It rewrites the downloaded pages, converting their links to relative links, so that you can browse the site on your hard disk. You can view the sitemap before downloading, resume an interrupted download, and set filters so that certain files are not downloaded. GetLeft supports 14 languages and can follow links to external websites. It is a good fit for downloading smaller sites, and for larger sites when you choose to skip the larger files they contain.

3. Cyotek WebCopy

This free tool can be used to copy partial or full websites to your local hard disk so that they can be viewed later offline. WebCopy works by scanning the website that you specify and then downloading its contents to your computer. Links that lead to things like images, stylesheets, and other pages are automatically remapped so that they match the local path. Thanks to its detailed configuration options, you can define which parts of the website are copied and which are not. Essentially, WebCopy looks at the HTML of a website to discover all of the resources that the site links to.
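To make the idea of scanning HTML for resources and remapping links more concrete, here is a minimal sketch using only the Python standard library. It is not how WebCopy itself is implemented. The tag list, the helper names `find_resources` and `local_path`, and the on-disk layout are all assumptions chosen for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# Attributes that commonly carry resource URLs (an illustrative subset).
URL_ATTRS = {"a": "href", "link": "href", "img": "src", "script": "src"}


class ResourceFinder(HTMLParser):
    """Collects absolute URLs of the resources a page refers to."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.resources = []

    def handle_starttag(self, tag, attrs):
        wanted = URL_ATTRS.get(tag)
        for name, value in attrs:
            if name == wanted and value:
                # Resolve relative links against the page's own URL.
                self.resources.append(urljoin(self.base_url, value))


def find_resources(html, base_url):
    """Return every resource URL found in `html`, in document order."""
    finder = ResourceFinder(base_url)
    finder.feed(html)
    return finder.resources


def local_path(url):
    """Map a URL to a relative path on disk (hypothetical layout)."""
    parsed = urlparse(url)
    path = parsed.path or "/"
    if path.endswith("/"):
        path += "index.html"  # directory-style URLs get an index file
    return parsed.netloc + path
```

A downloader built on this would fetch each URL returned by `find_resources`, save it under `local_path(url)`, and rewrite the links in the saved HTML to point at those local files.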

4. SiteSucker

This application runs only on Mac computers and is made to automatically download websites from the internet. It does this by copying the website's individual pages, PDFs, style sheets, and images to your local hard drive, duplicating the website's directory structure. All that you have to do is enter the URL and hit Enter, and SiteSucker will take care of the rest. Essentially, you are making a local copy of a website and saving the information about the download into a document that can be accessed whenever it is needed, regardless of internet connection. You can also pause and restart downloads. Websites may also be translated from English into French, German, Italian, Portuguese, and Spanish.

5. GrabzIt

In addition to grabbing data from websites, GrabzIt's scraping tool can extract data from PDF documents. First, you identify the website or sections of websites that you want to scrape, and when you would like the scrape to run. Next, you define the structure in which the scraped data should be saved. Finally, you define how the scraped data should be packaged, meaning how it should be presented to you when you browse it. The scraper reads the website the way users see it, using a specialized browser, which allows it to capture both dynamic and static content and transfer it to your local disk. Once everything has been scraped and formatted on your local drive, you can use and navigate the website as if it were accessed online.

6. Teleport Pro

This is a great all-around tool for gathering data from the internet. You can launch up to 10 retrieval threads, access password-protected sites, filter files by type, and even search for keywords. It has the capacity to handle websites of any size, and it is said to be one of the only scrapers that can find every file type on any website. The highlights of the program are the ability to search websites for keywords, explore all pages from a central site, list all pages from a site, search a site for a specific file type and size, create a duplicate of a website with its subdirectories and files, and download all or part of a site to your own computer.

7. FreshWebSuction

This is a freeware browser for Windows. Not only can you browse websites with it, but the browser itself acts as the webpage downloader. You create projects to store your sites offline. For each project, you select how many links away from the starting URL you want to save, and you define exactly what you want to save from the site, such as images, audio, graphics, and archives. The project is complete once the desired web pages have finished downloading, after which you are free to browse the downloaded pages offline. In short, it is a user-friendly desktop application that lets you dictate exactly what is downloaded.
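The "how many links away from the starting URL" setting is a depth limit on the crawl. A minimal sketch of that idea, assuming the site's links are already known and held in a plain dictionary (a real tool would parse them out of each downloaded page), could look like this. The function name `pages_within_depth` is made up for the example.

```python
from collections import deque


def pages_within_depth(links, start, max_depth):
    """Breadth-first walk returning pages at most max_depth links from start.

    `links` maps each page to the pages it links to. The result maps
    every reachable page to its distance (in links) from `start`.
    """
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        if depth[page] == max_depth:
            continue  # do not follow links beyond the limit
        for target in links.get(page, []):
            if target not in depth:  # skip pages already scheduled
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# A tiny made-up site: the homepage links to /a and /b, and so on.
site = {"/": ["/a", "/b"], "/a": ["/c"], "/b": ["/"], "/c": ["/d"]}
print(pages_within_depth(site, "/", 1))
```

With a limit of 1, only the homepage and the pages it links to directly are kept. Raising the limit pulls in pages further from the starting URL.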

How to Download With No Program

You can also download a page to your local drive without any extra software, so that you can access it when you are not connected to the internet. Open the page that you want to keep, such as the website's homepage. Right-click on the page and choose Save Page As, then choose a file name and a download location. The browser saves the current page along with the images and other files it needs to display, as long as the page does not require a login. Repeat this for each page that you want to keep.

Alternatively, if you are the owner of the website, you can zip its files on the server and download the archive. You will also need to export a backup of the database from phpMyAdmin, and then install both on your local server.
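If you have shell or script access to the server, the zipping step can be sketched in a few lines of Python. This is only an illustration: the function name `zip_site` and the directory names are assumptions, and it covers the site's files only, not the database export.

```python
import shutil
from pathlib import Path


def zip_site(site_dir, archive_base):
    """Pack everything under site_dir into <archive_base>.zip.

    Returns the path of the archive that was written. Keep
    archive_base outside site_dir so the archive does not try to
    include itself.
    """
    site_dir = Path(site_dir)
    if not site_dir.is_dir():
        raise NotADirectoryError(site_dir)
    return shutil.make_archive(str(archive_base), "zip", root_dir=str(site_dir))
```

For example, `zip_site("public_html", "backups/site")` would write `backups/site.zip`, assuming your site lives in a folder called `public_html`.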

Using the GNU Wget Command

Sometimes referred to simply as wget, and formerly known as geturl, GNU Wget is a program that retrieves content from web servers. As part of the GNU project, it supports downloads over the HTTP, HTTPS, and FTP protocols. It supports recursive downloads, conversion of links in downloaded HTML for offline viewing, and proxies.

To use wget, invoke it from the command line and pass one or more URLs as arguments.

With additional options, it can automatically download multiple URLs recursively into a directory hierarchy that mirrors the site.
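As a rough illustration, the sketch below builds a wget command for an offline copy and prints it. The helper name `wget_mirror_command` and the example URL are made up, and the choice of options is just one reasonable combination, so check the wget manual for your version before relying on it.

```python
import shlex


def wget_mirror_command(url, depth=None):
    """Build a wget argument list for an offline copy of `url`."""
    args = [
        "wget",
        "--recursive",        # follow links within the site
        "--page-requisites",  # also fetch images, CSS, and scripts
        "--convert-links",    # rewrite links so they work locally
        "--no-parent",        # stay below the starting directory
    ]
    if depth is not None:
        args.append(f"--level={depth}")  # limit how deep the recursion goes
    args.append(url)
    return args


# Print the command so it can be reviewed or pasted into a terminal.
print(shlex.join(wget_mirror_command("https://example.com/docs/", depth=2)))
```

To actually run the download from Python, you could pass the list to `subprocess.run(args, check=True)`, which requires wget to be installed and on your PATH.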

Mobile Options

Can you recall how many times you have been reading an article on your phone or tablet and been interrupted, only to find that you lost it when you came back to it? Or found a great website that you wanted to explore but wouldn't have the data to do so? This is when saving a website on your mobile device comes in handy.

Offline Pages Pro allows you to save any website to your mobile phone so that it can be viewed while you are offline. What makes this different from the computer applications and most other phone applications is that the program saves the whole webpage to your phone, not just the text without context. It preserves the format of the site, so it looks no different from the website online. The app requires a one-time purchase of $9.99. When you need to save a web page, just tap the button next to the web address bar. This saves the page so that it can be viewed offline whenever you need it.

To access your saved pages, tap the button in the middle of the bottom of the screen, which brings up a list of everything you have saved. To delete a page, swipe it and tap the button when the option to delete comes up, or use the Edit button to mark several pages for deletion. In the Pro version of the app, you can tag pages, making it easier to find them later with your own organized system. You can also opt to have saved websites updated automatically at intervals, keeping all of your sites current for the next time that you go offline.

Read Offline for Android is a free app for Android devices. It allows you to download websites onto your phone so that they can be accessed later when you may be offline. The websites are stored locally in your phone's memory, so you will need to make sure that you have enough storage available. In the end, you will have pages that can be browsed quickly, just as if they were being accessed online. It is a user-friendly app that is compatible with Android smartphones and tablets.

I can't stop thinking about this site. It looks like pretty standard fare: a website with links to different pages. Nothing to write home about, except that… the whole website is contained within a single HTML file.

What about clicking the navigation links, you ask? Each link merely shows and hides certain parts of the HTML.

Each link in the main navigation points to an anchor on the page:

And once you click a link, the

See that :target pseudo selector? That's the magic! Sure, it's been around for years, but this is a clever way to use it for sure. Most times, it's used to highlight the anchor on the page once an anchor link to it has been clicked. That's a handy way to help the user know where they've just jumped to.

Anyway, using :target like this is super smart stuff! It ends up looking like just a regular website when you click around.
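To try the idea yourself, here is a small sketch in Python that writes out a one-file site built on :target. The site's actual stylesheet is not reproduced here, so the two CSS rules, the helper name `single_file_site`, and the sample pages are assumptions that simply follow the show-and-hide behavior described above.

```python
from html import escape

# Assumed :target rules: hide every section, then show the one whose id
# matches the fragment in the URL (for example #about).
STYLE = "section { display: none; } section:target { display: block; }"


def single_file_site(pages):
    """Render {section_id: (title, body_text)} as one HTML document."""
    # Each navigation link points to an anchor on the same page.
    nav = "".join(
        f'<a href="#{escape(pid)}">{escape(title)}</a> '
        for pid, (title, _) in pages.items()
    )
    # Each "page" is a section whose id is the anchor's target.
    sections = "".join(
        f'<section id="{escape(pid)}"><h1>{escape(title)}</h1>'
        f"<p>{escape(body)}</p></section>"
        for pid, (title, body) in pages.items()
    )
    return (
        '<!DOCTYPE html><html><head><meta charset="utf-8">'
        f"<style>{STYLE}</style></head>"
        f"<body><nav>{nav}</nav>{sections}</body></html>"
    )


html = single_file_site({
    "home": ("Home", "Welcome to the site."),
    "about": ("About", "Everything lives in one file."),
})
```

Saving `html` to a file and opening it with `#home` at the end of the address shows the first section. With these two rules alone, nothing is visible until a link has been clicked, so a real page would need some way to show a default section.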